About Us

- Indian Institute of Technology, Kanpur has two High Performance Computing clusters, named PARAM Sanganak and HPC2013.

- PARAM Sanganak, sponsored by NSM (National Super-computing Mission), has been available for use since March 2021.

- Institute users can have an account on any of the HPC facilities.

- Visit PARAM Sanganak for the user manual and application process.

- Visit HPC2013 for the user manual and application process.

- The online HPC Application and Reporting System (HARS) can be accessed through the HARS Portal.

- Applications for account renewal and new account creation opened on October 26, 2024.

- A top-up (recharge) option is available for adding more core hours to your PARAM Sanganak account.

- From August 1, 2024, usage-based computing is enabled on PARAM Sanganak.

- From August 1, 2024, a new High-Priority Access facility is available on PARAM Sanganak for IIT Kanpur users.

- Charges for high-priority access and regular access are different.

- Accounting charges for PARAM Sanganak and HPC2013 are separate.

- We organized a two-day workshop on HPC Scalability (July 18-19, 2024). For details click here.

Research

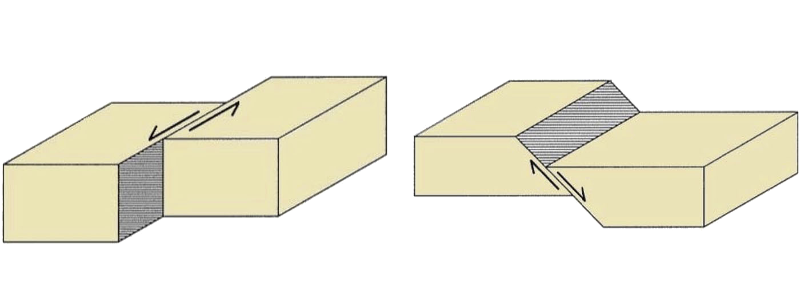

Tsunami Simulation

On 23 January 2018, an Mw 7.9 earthquake occurred in the Gulf of Alaska within the Pacific Plate but did not create a tsunami (a large and vigorous motion of the sea), whereas a similar event nearby in 1964 caused a massive tsunami. This difference was investigated for the first time using basic fluid equations. It was shown that the dip-slip event displays stronger receptivity than the strike-slip event.

Tapan Sengupta and team, Dept. of Aerospace Engineering

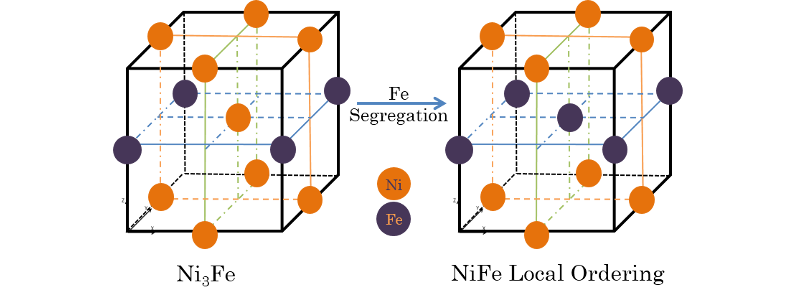

Pollution Control

Molecular simulation is used to understand bulk and surface structures and associated properties of materials relevant to engineering applications. Ni3Fe alloy is used as a catalyst to convert carbon dioxide to methane, and this kind of catalyst can be used in exhaust pipes and chimneys. The proposed theoretical models of such catalyzed reactions match experimental observations.

Pankaj A. Apte and team, Dept. of Chemical Engineering

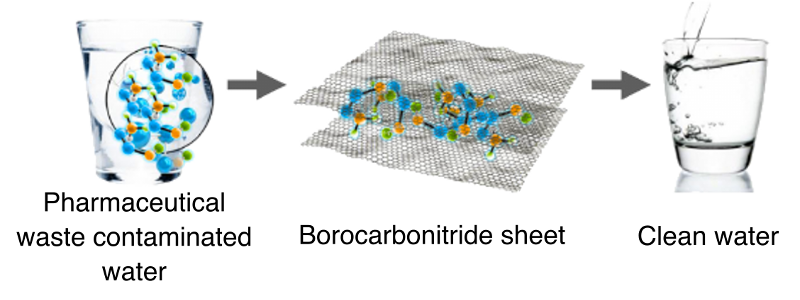

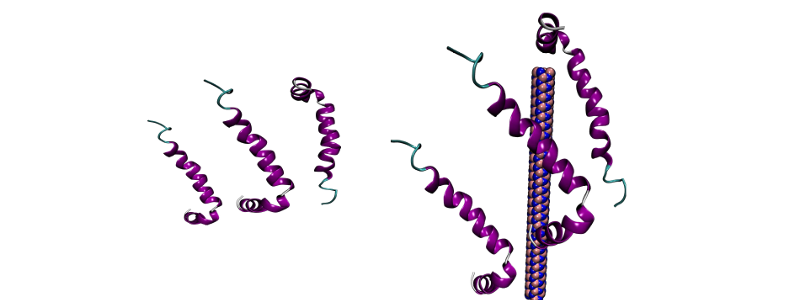

Cleaning Pharmaceutical Waste

Using molecular dynamics simulation coupled with ingenious thinking, it was found that sheets of borocarbonitride (BCN) adsorb aspirin from waste water better than graphene and hexagonal boron nitride, which are popularly used for such work.

Jayant K. Singh and team, Dept. of Chemical Engineering

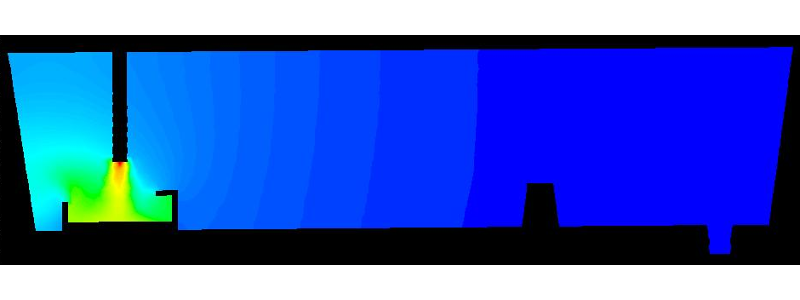

Manufacturing steel

The image shows contours of the mass fraction of steel, used in modelling steel manufacturing. Some of these models are already used in industry for better process performance. Such flows are inherently multi-dimensional and multi-phase, and sometimes reacting. As a result, thermal and phasic volume redistribution occurs in the system. Particularly during the transfer of metal from one vessel to another, moving solid regions are encountered, and all of these make multiphase flow computation really challenging.

Deepak Mazumdar and team, Dept. of Materials Science And Engineering

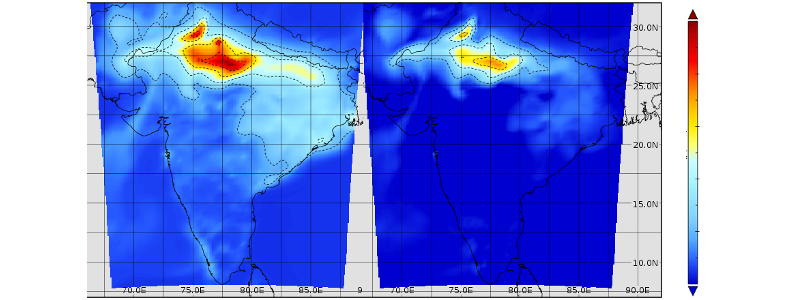

Modeling pollutants in air

To simulate pollutant levels in ambient air, it is important to understand the transformations and depositions of different pollutants in the atmosphere. The chemistry of pollutants in the atmosphere is very complex, as the reaction rates among different species vary. Thus, the model has to consider all the transformations and the various processes related to physical and chemical changes. CO levels increase drastically during biomass burning in the Punjab and Haryana region, and the increase extends further across the Indo-Gangetic Plain.

Mukesh Sharma and team, Dept. of Civil Engineering

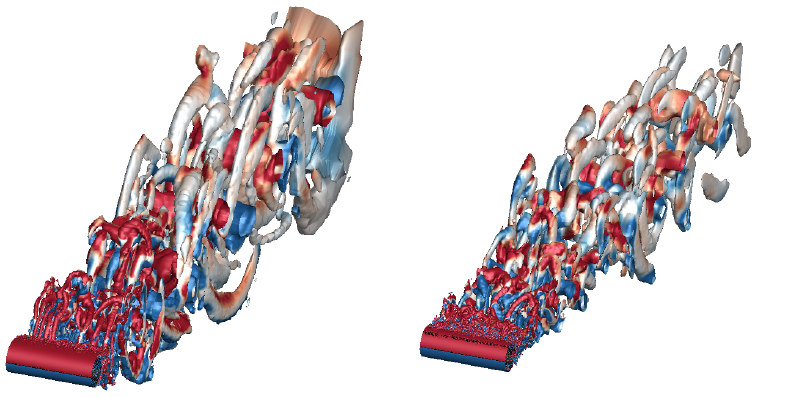

Turbulence around aeroplane

Stabilized finite element methods are used to solve three-dimensional, unsteady turbulent flows past complex bodies for various fluid and material properties. These methods are able to handle complex geometries, including those that deform with time. These studies are useful in understanding flow past bluff bodies, airfoils, wings, etc. Supersonic flows inside intakes and nozzles are also studied.

Sanjay Mittal and team, Dept. of Aerospace Engineering

Alzheimer's Disease

Alzheimer's disease (AD) is the most common neurodegenerative disorder, marked by amyloids (aggregates of protein) deposited in various parts of the human brain. Various studies show that the main cause of damage to the brains of AD patients is oligomers (molecular complexes that consist of a few repeating units). Investigations into preventing such protein aggregation are being conducted here.

Amalendu Chandra and team, Dept. of Chemistry

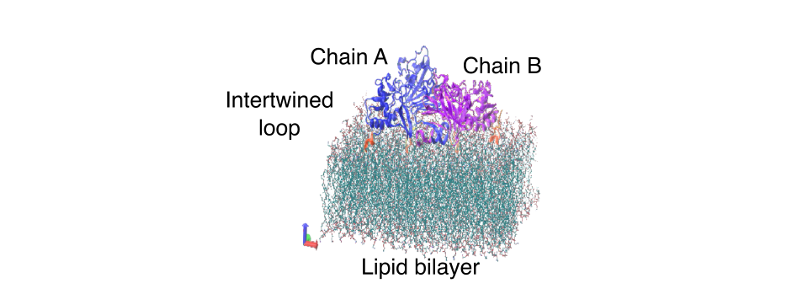

Virus pathways

Lipid bilayers are continuous flat protective membranes that cover cells. Dengue and similar viruses attack this layer to get into the cell and hijack it. This image is a snapshot of a molecular dynamics simulation aimed at a better understanding of the disease pathway, to help design improved and efficient prevention strategies.

R. Sankararamakrishnan and team, Dept. of Biological Sciences and Bioengineering

Extraction of petroleum

Reactions involving zeolites are widely used for oil and petroleum extraction. Some of these interesting chemical reactions are investigated with the quantum mechanics/molecular mechanics program developed by the group, combined with rare-event sampling techniques, in search of better and more efficient extraction techniques.

Nisanth N. Nair and team, Dept. of Chemistry

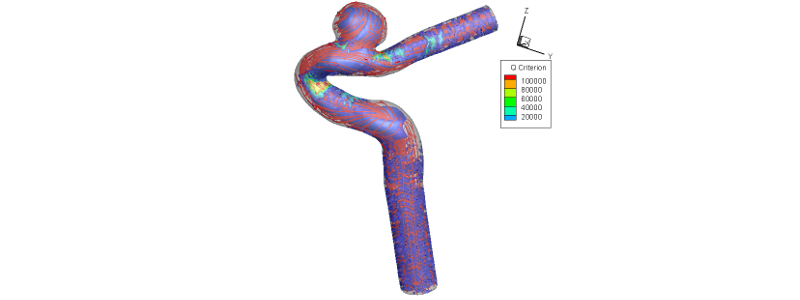

Artery issues

An aneurysm is the enlargement of an artery caused by weakness in the arterial wall. Often there are no symptoms, but a ruptured aneurysm can lead to fatal complications. The group's major objective is to simulate aneurysms in patient-specific geometries using appropriate models and to develop micro-drug particles for quick recovery.

Malaya Kumar Das and team, Dept. of Mechanical Engineering

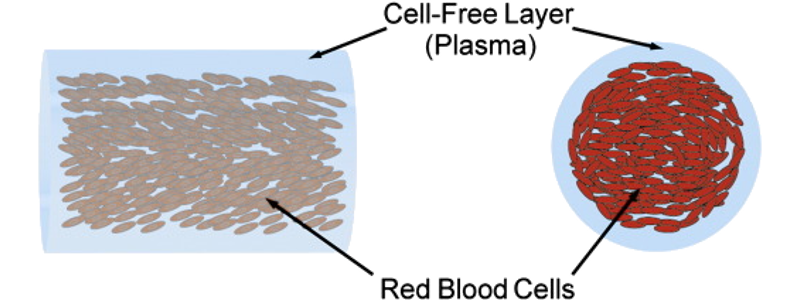

Modeling blood flow

Migration of red blood cells (RBCs) from the walls towards the centre of the capillaries due to shear-induced diffusion (SID) is a recently studied phenomenon. It results in a cell-free layer near the walls of the capillaries. Blood consists primarily of RBCs, white blood cells (WBCs) and platelets. Blood flow inside arteries and capillaries can cause WBCs and platelets to separate from RBCs and move towards the wall, while RBCs tend to migrate towards the centre. With these studies, the models of blood flow are being improved.

K Muralidhar and team, Dept. of Mechanical Engineering

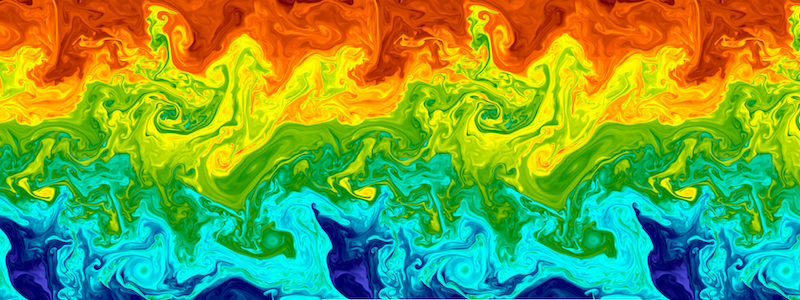

Turbulence in nature

Turbulent convection is ubiquitous. It is found in a cup of coffee, the earth's mantle, the atmosphere, inside stars and also in inter-galactic regions. In such flows, energy travels from one length scale to another. This image is a snapshot of a turbulent stratified flow simulation used to study such energy transfer laws when the bottom of the fluid is cold (blue) and the top is hot (red), as found in the stratospheric region of the atmosphere. The in-house program Tarang is used for such simulations.

Mahendra K Verma and team, Dept. of Physics

Details

High Performance Computing (HPC)

HPC systems at the Computer Centre, IIT Kanpur, greatly facilitate high-end computational research in the country across many areas of science and engineering. IIT Kanpur already has two HPC clusters, known as HPC 2010 and HPC 2013. The 372-node HPC 2010 cluster is based on Intel Xeon quad-core processors with a total of 2944 cores and a high-speed InfiniBand network, and it has a peak performance of 34.5 teraflops. The 899-node HPC 2013 cluster is also based on Intel Xeon processors and has 17980 cores in total and a peak performance of 360 teraflops. On the infrastructure side, a modern data centre with state-of-the-art precision air conditioning and fire safety features is part of the Computer Centre.

Computer Centre staff and engineers are committed to the smooth running of HPC operations. As Head of the Computer Centre and HPC group convener, we are delighted to see the research carried out by project investigators using the HPC systems, which brings laurels to the institute. We wish them more success ahead.

Prof. Nisanth Nair, DDIA

Specifications: ParamSanganak | HPC-2013 | HPC-2010

HPC 2013

HPC2013 is part of the high-performance computing facility at Indian Institute of Technology Kanpur and is funded by the Department of Science and Technology (DST), Govt. of India. Initially, the machine had 781 nodes. Another 120 nodes were added in 2013-2014. This machine was ranked 118 in the TOP500 list published at www.top500.org in June 2014.

In the initial ratings, it had an Rpeak of 359.6 teraflops and an Rmax of 344.3 teraflops. After extensive testing of this machine, we were able to achieve an efficiency of 96% on the Linpack benchmark.

System Details:

Each node is an HP ProLiant SL230s Gen8 server with two Intel Xeon E5-2670 v2 (Ivy Bridge) CPUs at 2.5 GHz, 10 cores each (20 cores per node), and 128 GB of RAM.

The nodes are connected by Mellanox FDR InfiniBand chassis-based switches that can provide 56 Gbps of throughput, arranged in a fat-tree topology. The cluster also has 500 terabytes of storage with an aggregate performance of around 23 Gbps on writes and 15 Gbps on reads.
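The quoted Rpeak and Linpack efficiency can be cross-checked with a little arithmetic. The sketch below is only illustrative: the node count of 899 is taken from the overview figure on this page, and the value of 8 double-precision floating-point operations per core per cycle is an assumption (the usual figure for AVX on Ivy Bridge), not something stated here. The function and variable names are hypothetical.

```python
# Cross-check of the HPC2013 figures quoted on this page.
# ASSUMPTION (not stated in the source): 8 double-precision FLOPs
# per core per cycle, the usual figure for AVX on Ivy Bridge.
FLOPS_PER_CYCLE = 8


def rpeak_tflops(nodes, cores_per_node, clock_ghz, flops_per_cycle):
    """Theoretical peak in teraflops: cores x clock (GHz) x FLOPs per cycle."""
    gflops = nodes * cores_per_node * clock_ghz * flops_per_cycle
    return gflops / 1000.0


def linpack_efficiency(rmax_tflops, rpeak_tflops_value):
    """Fraction of the theoretical peak reached on the benchmark."""
    return rmax_tflops / rpeak_tflops_value


# 899 nodes (overview figure), 20 cores per node, 2.5 GHz clock.
hpc2013_rpeak = rpeak_tflops(899, 20, 2.5, FLOPS_PER_CYCLE)
# Rmax and Rpeak as quoted in the initial ratings.
hpc2013_eff = linpack_efficiency(344.3, 359.6)

print(f"Rpeak: {hpc2013_rpeak:.1f} teraflops")
print(f"Linpack efficiency: {hpc2013_eff:.1%}")
```

Under these assumptions the computed peak agrees with the quoted 359.6 teraflops, and the ratio of Rmax to Rpeak rounds to the quoted 96%.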

For more information download the data sheet.

HPC 2010

HPC2010 is part of the high-performance computing facility at Indian Institute of Technology Kanpur and is funded by the Department of Science and Technology (DST), Govt. of India. This machine has 376 nodes, of which 368 are compute nodes. At the time of its installation, this machine was ranked 369 in the TOP500 list published at www.top500.org in June 2010.

In the initial ratings, it had an Rpeak of 34.50 teraflops and an Rmax of 29.01 teraflops.

System Details:

Each node has two Intel Xeon X5570 (Nehalem) CPUs at 2.93 GHz (8 cores per node) with 48 GB of RAM. The cluster was later augmented with 96 nodes of Intel Xeon E5-2670 at 2.6 GHz (16 cores per node) with 64 GB of RAM per node, which add a theoretical 31 teraflops to the original 2010 cluster. PBS Pro is the scheduler of choice. Though the added nodes have FDR InfiniBand cards, they connect seamlessly to the QDR InfiniBand fabric through a fat-tree topology.

The nodes are connected by QLogic QDR InfiniBand federated switches that can provide 40 Gbps of throughput. The cluster also has 100 terabytes of storage with an aggregate write performance of around 5 Gbps.
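Because HPC2010 mixes two generations of nodes, its theoretical peak is the sum of the peaks of its partitions. The sketch below illustrates that sum using the node counts, core counts and clock speeds given above. The FLOPs-per-cycle values (4 for the original 2.93 GHz nodes, 8 for the added 2.6 GHz nodes) are assumptions based on the usual SSE and AVX figures and are not stated on this page. All names are hypothetical.

```python
# Partitions of HPC2010 as described on this page:
# (label, compute nodes, cores per node, clock in GHz, FLOPs per cycle).
# ASSUMPTION (not in the source): 4 FLOPs/cycle for the original nodes
# (SSE) and 8 FLOPs/cycle for the added nodes (AVX).
PARTITIONS = [
    ("original 2.93 GHz nodes", 368, 8, 2.93, 4),
    ("added 2.6 GHz nodes", 96, 16, 2.6, 8),
]


def partition_rpeak_tflops(nodes, cores_per_node, clock_ghz, flops_per_cycle):
    """Theoretical peak of one homogeneous partition, in teraflops."""
    return nodes * cores_per_node * clock_ghz * flops_per_cycle / 1000.0


# Peak of each partition, keyed by its label.
peaks = {
    label: partition_rpeak_tflops(nodes, cores, ghz, fpc)
    for label, nodes, cores, ghz, fpc in PARTITIONS
}
combined_peak = sum(peaks.values())

# Efficiency of the original partition from the quoted Rmax and Rpeak.
original_efficiency = 29.01 / 34.50

for label, tflops in peaks.items():
    print(f"{label}: {tflops:.2f} teraflops")
print(f"combined: {combined_peak:.2f} teraflops")
print(f"Linpack efficiency (original nodes): {original_efficiency:.1%}")
```

Under these assumptions the original partition comes out at the quoted 34.50 teraflops, and the added nodes at just under 32 teraflops, in line with the roughly 31 teraflops quoted above.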

For more information download the data sheet.
